Discovery Mixes

Unlocking Carousell’s next wave of growth through mixed-method research and a dash of Lean

How do you tell your boss that the strategy that made the company big is now preventing it from getting bigger?

You don’t tell him. You show him.

In the past 8 years, Carousell has gone from a Startup Weekend idea to one of the world’s largest and fastest-growing secondhand marketplaces. Its expansion across Southeast Asia, Taiwan and Hong Kong combined organic word-of-mouth growth with a strong user focus.

From Early Adopter to Power Users

All this was possible because Carousell has always listened to its users, adapting the platform to their needs. In the early days, this obsession with the user meant the founders spent more time in flea markets or talking with early adopters than coding at the office. Nowadays, it is ingrained in all teams: from Customer Support and UX researchers, who gather user stories, to data analysts and engineers, who enable hundreds of A/B tests when launching new features.

Small Cohort, powerful insights

Set side by side with overall usage, the number of interactions generated by new users is small enough that product teams can easily overlook this cohort. But the Buyer Experience team had an intuition that understanding these users better could unlock Carousell’s next wave of growth.

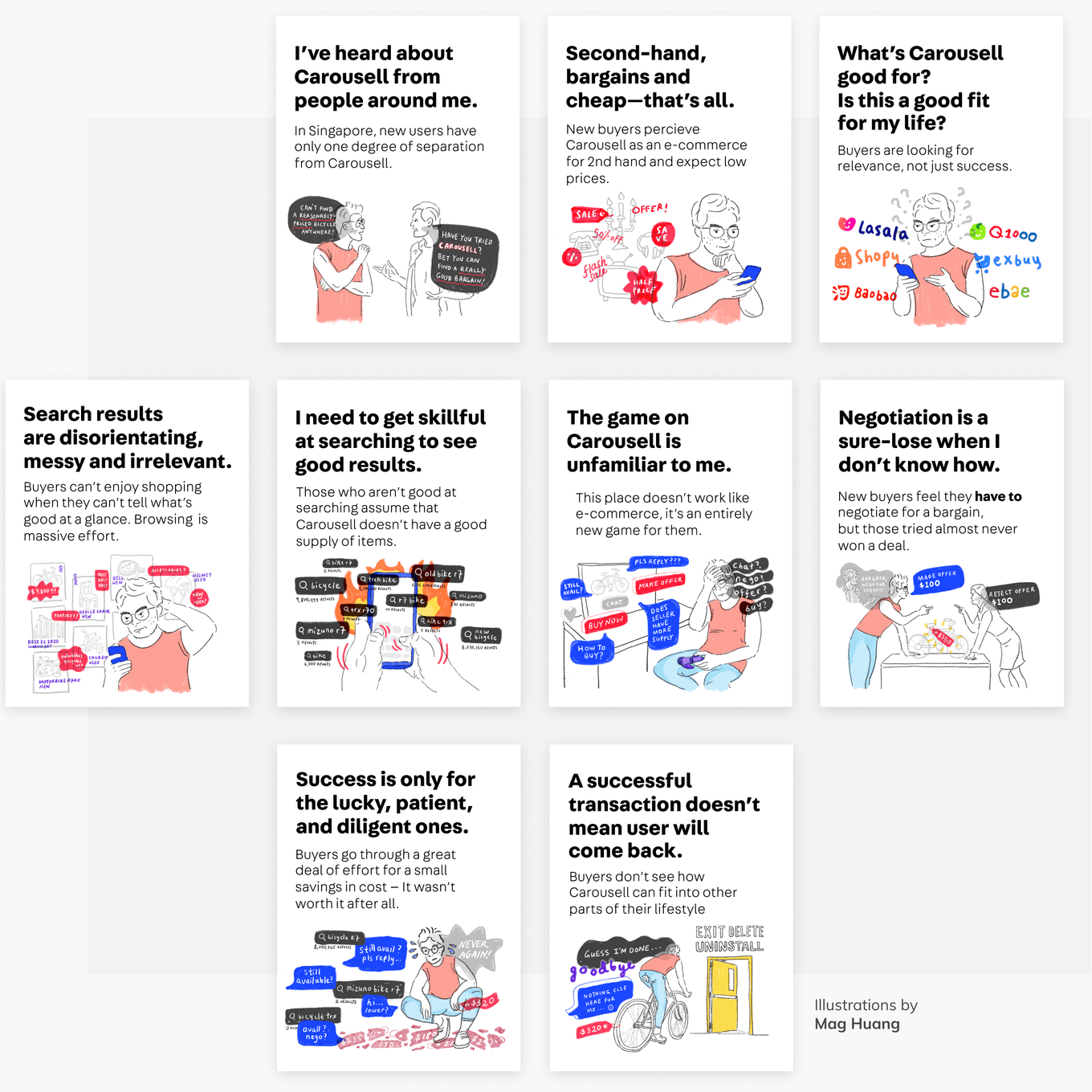

We decided to go deep and studied 70 new buyers. After a series of user interviews, reviews of interaction history, low-fi experiments and a follow-up 3 months later, some patterns started to emerge. Below is a compilation of them:

From this understanding we decided to start by focusing on the top of the funnel and created our first big hypothesis/assumption:

New users who find a breadth of interests on Carousell tend to be retained and more engaged

When we looked back at all new users (not only the subjects of our study) the quantitative data supported our claim:

Users who viewed at least 1 listing in each of 5 different categories in their first month were more than 5 times as active in the subsequent month (an average of 2 active days per week against 1.4 active days per month). But was it just a coincidence? Or, as our fellow data analysts would prefer to say, is it correlation or causation? Next step: validate it… the Lean way!
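The cohort comparison described above can be sketched roughly as follows. This is a minimal illustration, not the team's actual analysis: the input shapes (`views` as `(user_id, category)` pairs from the first month, `active_days_next_month` as a per-user count) and the function names are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def category_breadth(views):
    """Return {user_id: number of distinct categories viewed}.

    `views` is an iterable of (user_id, category) pairs covering each
    user's first month (hypothetical schema).
    """
    seen = defaultdict(set)
    for user_id, category in views:
        seen[user_id].add(category)
    return {user_id: len(cats) for user_id, cats in seen.items()}


def compare_cohorts(views, active_days_next_month, min_categories=5):
    """Average next-month active days for 'broad' vs 'narrow' new users.

    A user is 'broad' if they viewed listings in at least `min_categories`
    distinct categories. Returns (broad_mean, narrow_mean); a mean is None
    when its cohort is empty.
    """
    breadth = category_breadth(views)
    broad, narrow = [], []
    for user_id, days in active_days_next_month.items():
        if breadth.get(user_id, 0) >= min_categories:
            broad.append(days)
        else:
            narrow.append(days)
    return (
        mean(broad) if broad else None,
        mean(narrow) if narrow else None,
    )
```

For scale, 2 active days per week is roughly 8.6 days in a 30-day month, about 6 times the 1.4 days quoted for comparison. A split like this only shows that breadth and activity move together; it cannot say which causes which, which is why the experiment comes next.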

Wizard of Oz

The next piece of the puzzle was creating a lightweight experiment on the app home screen – I say lightweight because it did not require a single line of precious code!

We created a version of the home screen for new users that, instead of focusing on what’s popular (iPhones, Nike shoes, LVs and road bikes), focused on diversity: it surfaced the unusual, the unexpected and also expert users’ hidden secrets, all manually added.

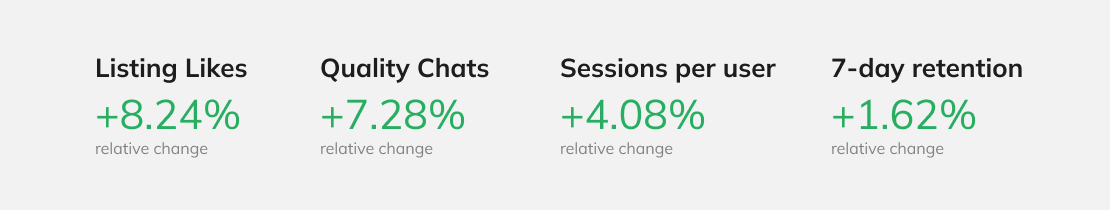

We did not expect the impact of the experiment to be significant; after all, it was a limited change in the experience of new users, and in this A/B test the treatment was available only for the first 5 active days. However, we were surprised to see some interesting results:

Proposed Solution

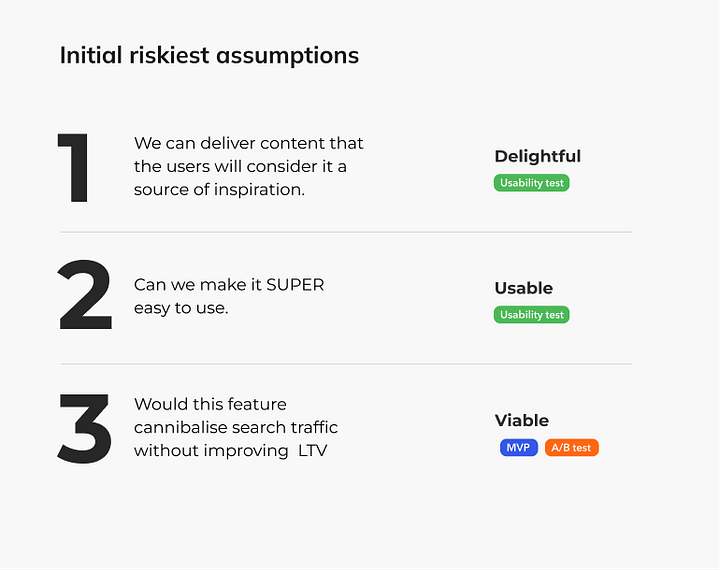

With our hypothesis validated, we received the green light to propose a brand-new flow for new users.

HMW (How Might We)

Enable a discovery experience with minimal friction that is both engaging and showcases the breadth of opportunities on the platform

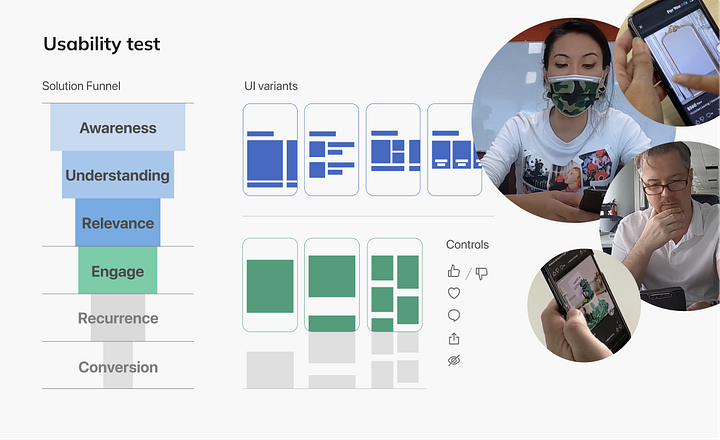

After a series of quick brainstorming sessions and rounds of usability testing, the team defined and developed a completely new discovery flow. The solution focused on exposing users to a wide range of listings through a simple UI while capturing real-time user feedback to further improve content relevance.

Early results

The first iteration improved listing likes and time spent, but the change in 7-day retention was not statistically significant. Post-launch research identified the need for a simpler entry point. The next steps focused on refining the ML algorithm based on concept-testing insights and redesigning the home screen section.
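To make "statistically significant" concrete, here is a minimal sketch of one common way to compare 7-day retention between a control and a treatment group: a two-sided two-proportion z-test. The source does not say which test the team used, so the choice of test, the function name and the numbers in the usage note are all assumptions for illustration only.

```python
from math import erfc, sqrt


def two_proportion_z_test(retained_a, total_a, retained_b, total_b):
    """Two-sided z-test for a difference between two retention rates.

    Group A is the control and group B the treatment (hypothetical naming).
    Returns (z, p_value) using the pooled-variance normal approximation.
    """
    if total_a <= 0 or total_b <= 0:
        raise ValueError("both groups need at least one user")
    p_a = retained_a / total_a
    p_b = retained_b / total_b
    pooled = (retained_a + retained_b) / (total_a + total_b)
    se = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("retention is 0% or 100% in both groups")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value
```

With made-up counts, `two_proportion_z_test(400, 1000, 410, 1000)` gives a p-value well above 0.05: a one-point lift on groups of that size cannot be distinguished from noise. That is the kind of situation in which engagement metrics can move while retention stays inconclusive.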

Reflections

Looking back, the team could have started the tech work on the algorithm earlier instead of waiting for most of the UX to be defined and tested. This would have allowed the team to have a better starting point for the suggestions model when testing the MVP.

The design team overlooked some friction points, such as the home screen entry point, which had a cascading effect on usage numbers. Developing more than one variant for this initial part of the funnel could have helped the team uncover a better solution.

On the other hand, the BX team managed to pivot based on the validated learnings gathered during the process. Some of these learnings are now successfully applied not just to the new user cohort but to all users in the buyer experience.